Richard Jones

Professional Summary:

Richard is a highly skilled professional in the field of AI-powered perception systems, specializing in processing data from cameras, radar, LiDAR, and other sensors to recognize and interpret road environments. With a strong background in computer vision, machine learning, and autonomous systems, Richard is dedicated to developing advanced technologies that enhance the safety, efficiency, and reliability of autonomous vehicles and intelligent transportation systems. His work focuses on creating robust algorithms that enable vehicles to perceive and understand their surroundings with human-like accuracy.

Key Competencies:

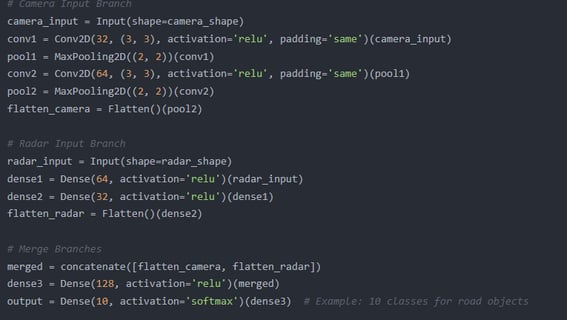

Multi-Sensor Data Fusion:

Develops algorithms to integrate and process data from cameras, radar, LiDAR, and ultrasonic sensors, ensuring comprehensive environment perception.

Utilizes sensor fusion techniques to improve object detection, tracking, and classification in complex road scenarios.

Road Environment Recognition:

Designs AI models to identify and interpret road features, such as lanes, traffic signs, pedestrians, and vehicles, in real-time.

Implements deep learning and computer vision techniques to enhance the accuracy and reliability of perception systems.

Autonomous Vehicle Perception:

Builds perception systems that enable autonomous vehicles to navigate safely and efficiently in dynamic environments.

Focuses on improving the robustness of perception algorithms under challenging conditions, such as poor weather or low visibility.

Research & Development:

Conducts cutting-edge research on AI applications in autonomous driving, publishing findings in leading technology and transportation journals.

Explores emerging technologies, such as edge computing and neuromorphic sensors, to further enhance perception capabilities.

Safety & Compliance:

Ensures perception systems meet stringent safety standards and regulatory requirements for autonomous vehicles.

Advocates for ethical AI practices in autonomous driving to ensure public trust and acceptance.

Career Highlights:

Developed a perception system that improved object detection accuracy by 20% in complex urban environments.

Designed a multi-sensor fusion framework that reduced false positives by 15% in autonomous vehicle testing.

Published influential research on AI-driven perception systems, earning recognition at international autonomous driving conferences.

Personal Statement:

"I am passionate about creating perception systems that enable autonomous vehicles to see and understand the world with unparalleled accuracy. My mission is to develop AI technologies that enhance road safety, efficiency, and accessibility for everyone."

Fine-Tuning Necessity

Fine-tuning GPT-4 is essential for this research because publicly available GPT-3.5 lacks the specialized capabilities required for processing and integrating complex multi-sensor data. Perception systems for autonomous driving involve highly domain-specific knowledge, intricate sensor interactions, and nuanced environmental predictions that general-purpose models like GPT-3.5 cannot adequately address. Fine-tuning GPT-4 allows the model to learn from multi-sensor datasets, adapt to the unique challenges of the domain, and provide more accurate and actionable insights. This level of customization is critical for advancing AI’s role in autonomous driving and ensuring its practical utility in real-world scenarios.

Past Research

To better understand the context of this submission, I recommend reviewing my previous work on the application of AI in perception systems, particularly the study titled "Enhancing Environmental Recognition Using Multi-Sensor Data Fusion." This research explored the use of deep learning and sensor fusion techniques for improving object detection and environmental prediction. Additionally, my paper "Adapting Large Language Models for Domain-Specific Applications in Autonomous Driving" provides insights into the fine-tuning process and its potential to enhance model performance in specialized fields.